This is a great question and something we need to discuss internally to see if there’s a decent solution. I would like OpenBoxes to be usable on a LAN but we have not made any effort to ensure that it can.

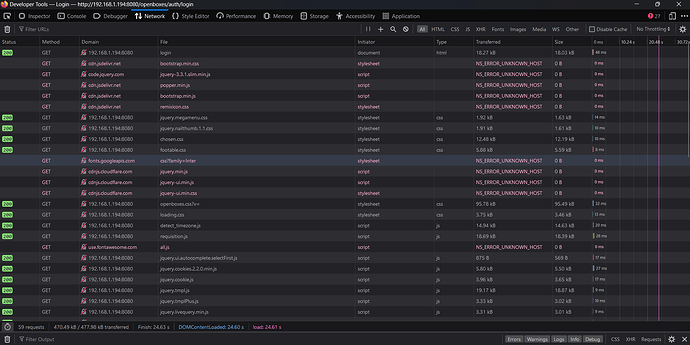

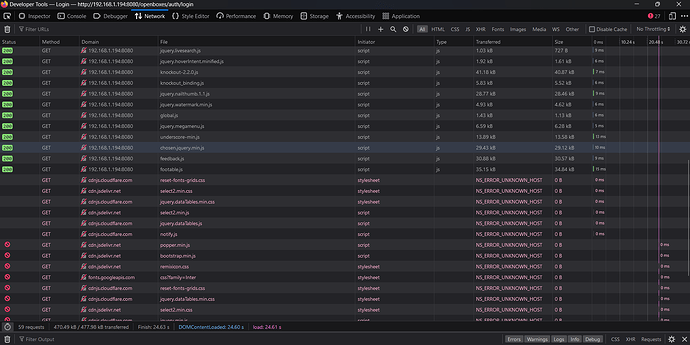

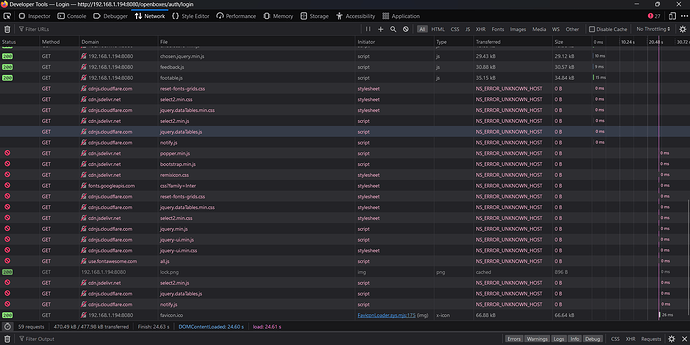

At the moment, you probably won’t be able to run OpenBoxes in the browser on computers that have never had Internet access, because some resources (JavaScript, CSS, fonts, etc.) are pulled from CDNs the first time a page is loaded (as @David_Douma suggested).

OpenBoxes mostly works on your computer right now because you were previously connected to the Internet, so those resources were downloaded and cached by your browser. As soon as you clear your cache, you’re going to start seeing problems.
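If you want to see which resources a page pulls from outside your own server, here’s a minimal sketch that scans a page’s HTML for `<script>`, `<link>`, and `<img>` tags pointing at another host. It only sees tags present in the HTML source, so resources loaded later by JavaScript or fonts referenced from CSS won’t show up. The sample markup and the `localhost:8080` address in the usage note are made-up examples, not OpenBoxes’ actual pages.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ExternalResourceFinder(HTMLParser):
    """Collect resource URLs that point at a host other than the page's own host."""

    # Tag -> attribute that holds the resource URL
    RESOURCE_ATTRS = {"script": "src", "link": "href", "img": "src"}

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.local_host = urlparse(page_url).netloc
        self.external = []

    def handle_starttag(self, tag, attrs):
        attr_name = self.RESOURCE_ATTRS.get(tag)
        if attr_name is None:
            return
        value = dict(attrs).get(attr_name)
        if not value:
            return
        # Resolve relative and protocol-relative URLs (e.g. //cdn.example.com/x.js)
        absolute = urljoin(self.page_url, value)
        if urlparse(absolute).netloc != self.local_host:
            self.external.append(absolute)


def find_external_resources(html, page_url):
    """Return every external script/link/img URL found in the given HTML."""
    finder = ExternalResourceFinder(page_url)
    finder.feed(html)
    return finder.external
```

For example, you could save the login page’s source (View Page Source) and call `find_external_resources(saved_html, "http://localhost:8080/openboxes/auth/login")`; anything it returns will need the Internet unless it’s already in the browser cache.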

We can definitely pull all resources down into the WAR file and forgo the need for CDNs. We just haven’t prioritized that.
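One part of that change would be pointing the pages at local copies instead of the CDN URLs. Here’s a minimal sketch of that rewriting step, assuming the files have already been downloaded into the WAR under a hypothetical `/openboxes/vendor/` path; how the real change gets made in OpenBoxes’ templates is still up to the dev team.

```python
from urllib.parse import urlparse

# Hypothetical path where vendored copies of CDN files would be served from inside the WAR
LOCAL_PREFIX = "/openboxes/vendor"


def local_path_for(cdn_url, prefix=LOCAL_PREFIX):
    """Map a CDN URL to a local path, keeping host and path so files from different CDNs don't collide.

    Query strings are dropped, so URLs that differ only by query string map to the same file.
    """
    parsed = urlparse(cdn_url)
    return f"{prefix}/{parsed.netloc}{parsed.path}"


def rewrite_cdn_references(markup, cdn_urls, prefix=LOCAL_PREFIX):
    """Replace each listed CDN URL in the markup with its local equivalent."""
    # Replace longer URLs first so a URL that is a prefix of another one can't clobber it
    for url in sorted(set(cdn_urls), key=len, reverse=True):
        markup = markup.replace(url, local_path_for(url, prefix))
    return markup
```

Keeping the CDN host in the local path is just one way to avoid name clashes between libraries; a flat `vendor/` folder would also work if file names are unique.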

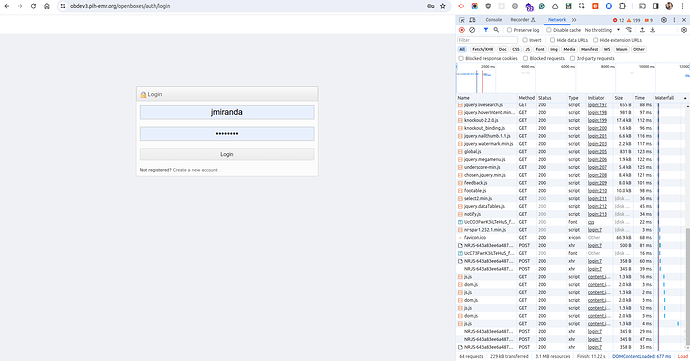

The slowness issue is related, but it’s probably a slightly different problem, which you should be able to confirm easily with the browser’s Inspect feature (developer tools).

The first time you load a page, all of its resources are downloaded from their respective sources (local server, CDNs, etc.).

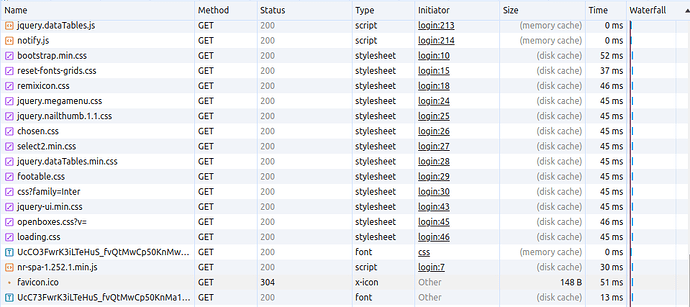

On subsequent page loads, you’ll notice that many resources in the Network tab show “(memory cache)” or “(disk cache)” in the Size column. These will be fine for a while because they’ll continue to be loaded from the browser cache. But once you clear the cache, you’ll need the Internet again for anything that was downloaded from a CDN.

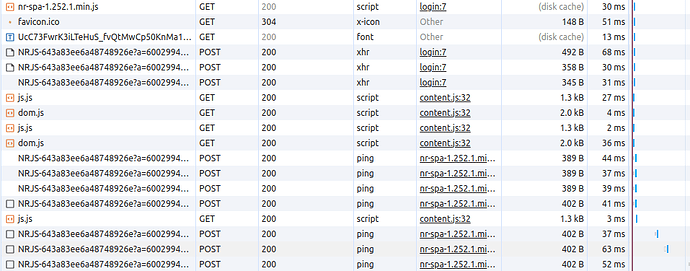
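If you export a HAR file from Chrome, you can list exactly which of those cached resources came from outside your server, i.e. the ones that will break once the cache is cleared. This is a minimal sketch that relies on `_fromCache`, a non-standard field Chrome adds to HAR entries (`"memory"` or `"disk"`); other browsers may not record it, and the `app_host` value you pass in (e.g. `localhost:8080`) is an assumption about how you reach OpenBoxes.

```python
import json
from urllib.parse import urlparse


def load_har(path):
    """Read a HAR file exported from the browser's Network tab."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def cached_external_resources(har, app_host):
    """Return (cache_type, url) pairs for resources from other hosts served from the browser cache.

    Uses Chrome's non-standard `_fromCache` field ("memory" or "disk");
    entries without it were fetched over the network.
    """
    results = []
    for entry in har["log"]["entries"]:
        cache_type = entry.get("_fromCache")
        url = entry["request"]["url"]
        if cache_type and urlparse(url).netloc != app_host:
            results.append((cache_type, url))
    return results
```

Usage: `cached_external_resources(load_har("login.har"), "localhost:8080")`.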

In addition, you’ll notice some resources that are downloaded on every page load (e.g., nr-spa-1.252.1.min.js), along with requests made to services we use for application monitoring and analytics.

For example, the POST request to NRJS-643a83ee6a48748926e sends metric data to New Relic to help us identify performance issues:

`POST https://bam.nr-data.net/events/1/NRJS-643a83ee6a48748926e`

Parameters sent with the request:

- `a` = 600299440
- `v` = 1.252.1
- `to` = MwAGY0tXWkQFUUdaWwpKS1hJU1pVC0pWQBsFEBBfFlpbUA1c
- `rst` = 828
- `ck` = 0
- `s` = e94758a5c21d5205
- `ref` = https://obdev3.pih-emr.org/openboxes/auth/login
- `ptid` = 5ff39916-0001-bfee-cd38-018e33ce095e
- `hr` = 0

As far as I recall, most of these features (e.g., HotJar) are disabled by default, so they should not be causing you any issues. The exception is the New Relic requests, but I was told by a developer that these should not affect performance since they are supposed to happen asynchronously.
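If you want to check whether the monitoring requests are misbehaving on your offline network (for example, hanging until they time out), a HAR export can show it. This is a minimal sketch: only `bam.nr-data.net` comes from the request above, and the other hosts in the list are assumptions about where the New Relic agent and HotJar load from. It relies on Chrome typically recording a status of 0 when no response was received. Since these requests run in parallel with everything else, a long time here doesn’t necessarily mean the page itself waited that long.

```python
from urllib.parse import urlparse

# Assumed monitoring/analytics hosts; only bam.nr-data.net appears in the request above
MONITORING_HOSTS = ("bam.nr-data.net", "js-agent.newrelic.com", "hotjar.com")


def monitoring_summary(har, hosts=MONITORING_HOSTS):
    """Per monitoring host: number of requests, how many got no response, and the slowest time (ms)."""
    summary = {}
    for entry in har["log"]["entries"]:
        host = urlparse(entry["request"]["url"]).netloc
        # Match the host exactly or as a subdomain (e.g. static.hotjar.com -> hotjar.com)
        match = next((h for h in hosts if host == h or host.endswith("." + h)), None)
        if match is None:
            continue
        stats = summary.setdefault(match, {"requests": 0, "failed": 0, "max_ms": 0.0})
        stats["requests"] += 1
        # Status 0 usually means no response was received (blocked, offline, timed out)
        if entry["response"]["status"] == 0:
            stats["failed"] += 1
        stats["max_ms"] = max(stats["max_ms"], entry["time"])
    return summary
```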

I’ll try to add this topic to our tech huddle tomorrow to see if we can come up with a strategy and perhaps a short-term fix. If you can pinpoint the slow-loading requests, we might be able to get a fix in before the next release.

Here’s how to take a screenshot showing all requests loaded for a particular page:

- Make sure your local machine is disconnected from the Internet

- Open your browser

- Navigate to OpenBoxes login page

- Right-click anywhere on the page

- Select the Inspect option

- Click on the Network tab

- Refresh the login page (in order to download the resources for that page)

- Take a screenshot

The most important data to capture (besides the Name) will be Time, Size, and Waterfall, so please make sure those columns and their values are visible.

You can also download the full log by clicking the download arrow (Export HAR…) in the top bar of the Network tab.
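Once you have the HAR file, a short script can pull out the same Time and Size information as a text list of the slowest requests. This is a minimal sketch based on the standard HAR fields (`time`, `timings.wait`, `response.bodySize`, `response.status`); `_transferSize` is a Chrome-specific extra that may be missing in other browsers, and `-1` means the value wasn’t recorded.

```python
import json


def slowest_requests(har, limit=10):
    """Return the `limit` slowest HAR entries with their time, size, wait, and status."""
    rows = []
    for entry in har["log"]["entries"]:
        response = entry["response"]
        # Prefer Chrome's `_transferSize` (bytes on the wire); fall back to the standard bodySize
        size = response.get("_transferSize", response.get("bodySize", -1))
        timings = entry.get("timings", {})
        rows.append({
            "url": entry["request"]["url"],
            "time_ms": entry["time"],
            "size_bytes": size,
            "wait_ms": timings.get("wait", -1),  # time spent waiting for the first byte
            "status": response["status"],
        })
    rows.sort(key=lambda r: r["time_ms"], reverse=True)
    return rows[:limit]


def print_report(path, limit=10):
    """Load a HAR file and print its slowest requests, one per line."""
    with open(path, encoding="utf-8") as f:
        har = json.load(f)
    for row in slowest_requests(har, limit):
        print(f'{row["time_ms"]:9.1f} ms  {row["size_bytes"]:>9} B  '
              f'wait {row["wait_ms"]:7.1f} ms  {row["status"]}  {row["url"]}')
```

Usage: `print_report("openboxes-login.har")`. Requests with a very long time and a status of 0 are good candidates for resources the browser is trying (and failing) to fetch from the Internet.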